This research paper was accepted at the ACCV 2024 conference and also serves as a master’s degree graduation thesis.

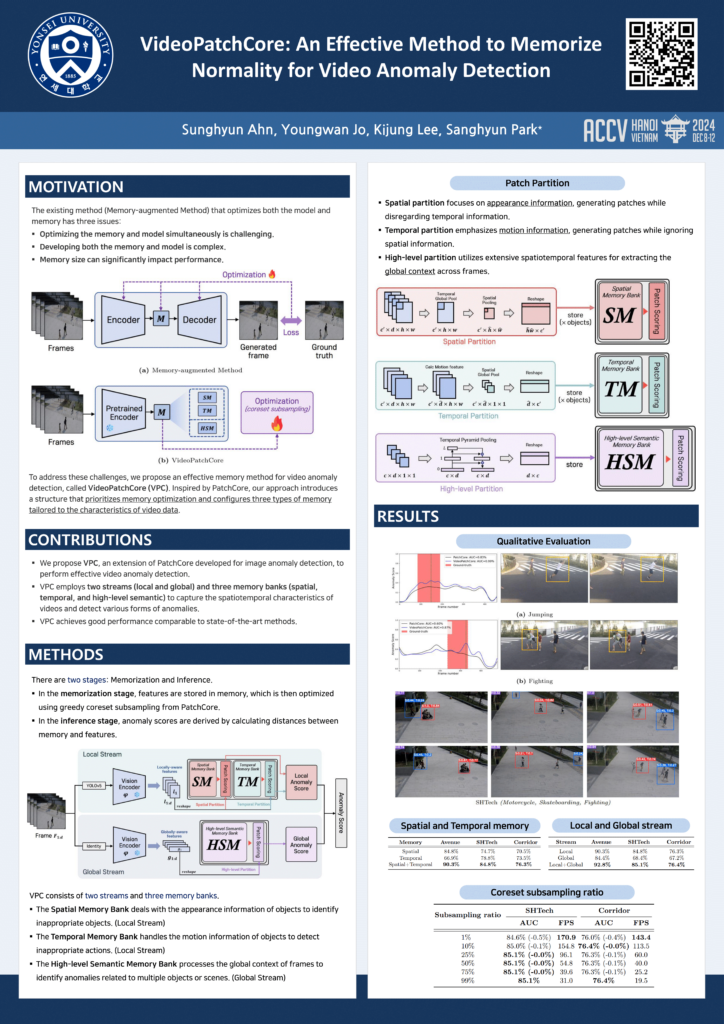

Title: VideoPatchCore: An Effective Method to Memorize Normality for Video Anomaly Detection

Authors: Sunghyun Ahn, Youngwan Jo, Kijung Lee, and Sanghyun Park†

Status: Accepted

Conference Information: 17th Asian Conference on Computer Vision [ACCV 2024]

Github page: [GitHub]

ArXiv page: [arXiv]

Computer Vision Foundation page: [CVF]

Abstract

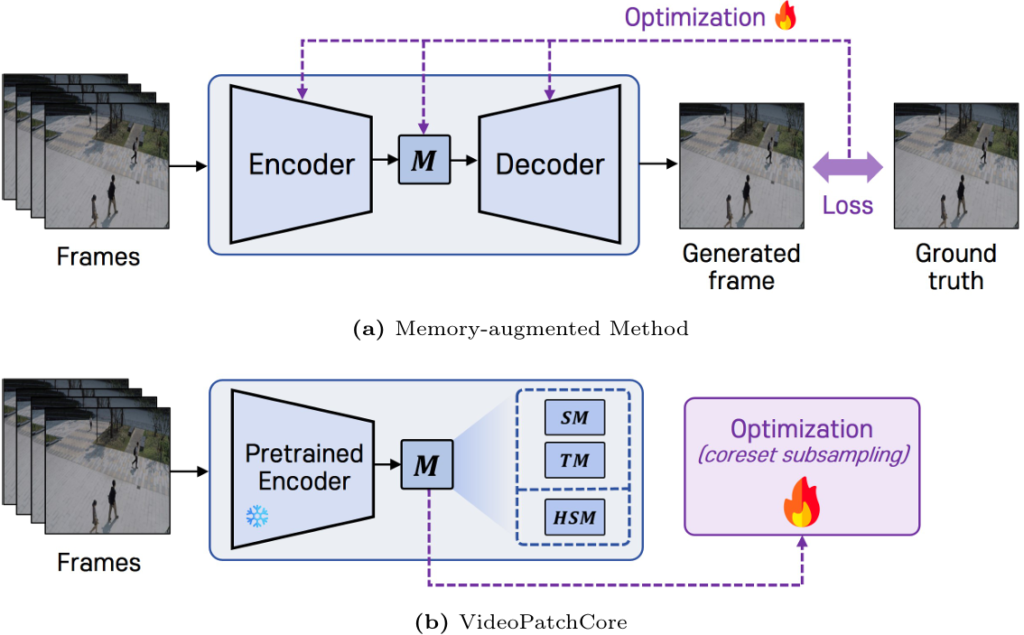

Video anomaly detection (VAD) is a crucial task in video analysis and surveillance within computer vision. Currently, VAD is gaining attention with memory techniques that store the features of normal frames. The stored features are utilized for frame reconstruction, and an abnormality is identified when a significant difference exists between the reconstructed and input frames. However, this approach faces several challenges because the memory and the encoder-decoder model must be optimized simultaneously. These challenges include increased optimization difficulty, complexity of implementation, and performance variability depending on the memory size. To address these challenges, we propose an effective memory method for VAD, called VideoPatchCore. Inspired by PatchCore, our approach introduces a structure that prioritizes memory optimization and configures three types of memory tailored to the characteristics of video data. This method effectively addresses the limitations of existing memory-based methods, achieving performance comparable to state-of-the-art methods. Furthermore, our method requires no training and is straightforward to implement, making VAD tasks more accessible.
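To make the training-free memory idea concrete, the following is a minimal sketch of a PatchCore-style memory bank, not the authors' implementation. It assumes features of normal frames are already available as fixed-length vectors (random vectors stand in for them here), compresses them with greedy k-center coreset selection, and scores a query by its distance to the nearest stored feature. The function names `greedy_coreset` and `anomaly_score` are hypothetical, and the three video-specific memories described in the abstract are not modeled.

```python
import numpy as np


def greedy_coreset(features, n_select, seed=0):
    """Pick n_select rows of `features` by greedy k-center selection.

    Each step adds the feature farthest from everything selected so far,
    so the subset covers the normal feature space with few entries.
    """
    rng = np.random.default_rng(seed)
    first = int(rng.integers(features.shape[0]))
    selected = [first]
    # Distance from every feature to its closest selected feature.
    min_dist = np.linalg.norm(features - features[first], axis=1)
    for _ in range(n_select - 1):
        idx = int(np.argmax(min_dist))
        selected.append(idx)
        dist = np.linalg.norm(features - features[idx], axis=1)
        min_dist = np.minimum(min_dist, dist)
    return features[selected]


def anomaly_score(memory, queries):
    """Return, for each query, the distance to its nearest memory entry."""
    diffs = queries[:, None, :] - memory[None, :, :]  # shape (Q, M, D)
    dists = np.linalg.norm(diffs, axis=2)             # shape (Q, M)
    return dists.min(axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    # Stand-in for features extracted from normal training frames.
    normal_features = rng.normal(size=(500, 16))
    memory = greedy_coreset(normal_features, n_select=50)

    normal_queries = rng.normal(size=(5, 16))
    abnormal_queries = rng.normal(size=(5, 16)) + 10.0  # shifted: "abnormal"
    print("normal scores:  ", anomaly_score(memory, normal_queries))
    print("abnormal scores:", anomaly_score(memory, abnormal_queries))
```

Because the memory is built by selection rather than learned jointly with an encoder-decoder, no gradient-based training is involved in this sketch; a higher score simply means the query lies farther from anything seen in normal data.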

Poster (ACCV 2024)

Demo

1. A video of an abnormal event where a person is running on campus.

2. A video of an abnormal event where a person is jumping on campus.

3. A video of an abnormal event where a person is throwing a bag on campus.

4. A video of an abnormal event where a person is walking in the wrong direction on campus.

Presentation (M.S. Thesis)